Build an Azure Sentinel lab - part three: deploying a domain controller using Bicep

Learn how to automatically deploy a domain controller within your Azure Sentinel lab

To be useful, an Azure Sentinel lab must replicate an enterprise network as closely as possible. In the first post of our Azure Sentinel lab-building series, we learned how to automate the deployment of a team lab. In our second post, we learned how to automate user access provisioning. However, to be realistic, the lab must also include an Active Directory domain controller.

By including a domain controller (DC), our team will enjoy a realistic, hands-on experience managing and securing centralized authentication, permissions, and user data. The DC will simulate an enterprise environment where users can practice configuring group policies, managing user accounts, and enforcing security protocols.

This will help them learn how critical components like Active Directory function, how to monitor a DC and how to mitigate potential threats like unauthorized access or privilege escalation. By working with a DC, users can develop skills in network defense, incident response, and system administration within a controlled, safe setting.

Prerequisites

As in our last post, you’ll need to meet three requirements to follow this article:

- You’ll need the Azure Sentinel lab deployment code developed in the previous post.

- You’ll also need to fulfil the prerequisites outlined in the first post, as they will help you install all necessary tools.

- Finally, ensure you have invited all users into the lab’s Azure tenant. You can follow this Azure tutorial to learn to execute bulk user invites.


Lab architecture overview

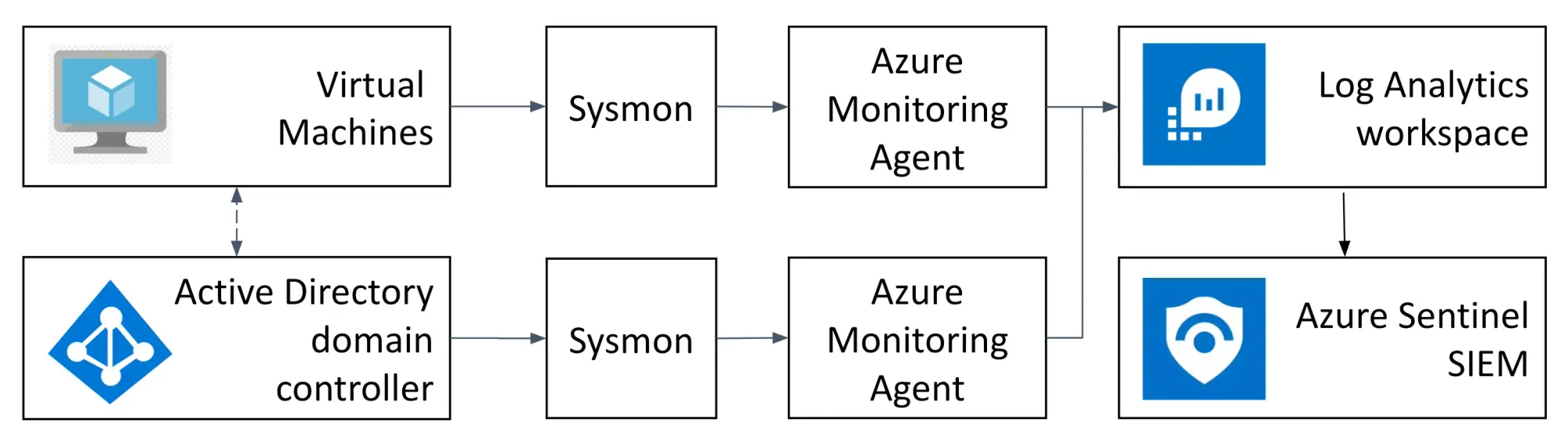

By introducing an Active Directory DC, our lab will increase in complexity and sophistication. The image below provides an overview of the lab’s final architecture design:

The data flow of our Azure Sentinel lab begins with the Virtual Machines (VMs) and the Active Directory DC, which act as the key assets in our simulated IT environment. On these systems, we will continue to use Sysmon to capture detailed logs on system activities, such as process creations, network connections, and file modifications.

As before, the Sysmon logs are picked up by the Azure Monitor Agent installed on each machine. This agent acts as a bridge, sending the data to the Log Analytics Workspace (LAW) in Azure. The LAW will continue to serve as our central repository for monitoring and logging data from our VMs and the Active Directory DC.

Once the logs are consolidated in the LAW, they will be forwarded to Azure Sentinel, our lab’s SIEM platform. Within Sentinel, our team can process, analyze, and visualize Sysmon data for threat detection, investigation, and response training purposes.

Deploying the domain controller

To deploy the domain controller, we add two new Bicep files to our modules folder. The first one will be named dc.bicep and will contain the majority of the DC deployment code. We will be reusing most of the code we wrote to deploy workstations.

As a starting point, we’ll add the below parameters to the top of our dc.bicep file:

param location string

param subnetId string

param vmName string

param DcAdminUsername string

@secure()

param DcAdminPassword string

param domainName string

param vmSize string

param userprincipalids object

param dcrid string

param labname string

param configscriptlocation string

Note the addition of the DcAdminUsername, DcAdminPassword and domainName parameters. These will become useful later when we install Active Directory on the DC.
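Bicep decorators can document and validate these parameters at deployment time. The sketch below is one possible way to annotate the three new parameters. The descriptions and minimum lengths are illustrative assumptions, not values taken from the lab’s code:

```bicep
// Sketch only: the three new dc.bicep parameters with optional decorators.
// The descriptions and minimum lengths below are assumptions for illustration.

@description('Account used to create the VM and to promote it to a domain controller.')
param DcAdminUsername string

@description('Password for the account above. @secure() keeps it out of the deployment history.')
@minLength(12)
@secure()
param DcAdminPassword string

@description('DNS name of the new Active Directory domain, for example soclab.local.')
@minLength(3)
param domainName string
```

With decorators like these, a deployment that supplies a too-short password or domain name fails validation before any resource is created.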

Then, we’ll create the DC’s public IP address and assign all lab users the contributor role on it:

// Create the domain controller's public IP address

resource dcpip 'Microsoft.Network/publicIPAddresses@2023-04-01' = {

name: '${vmName}-ip'

location: location

properties: {

publicIPAllocationMethod: 'Dynamic'

dnsSettings: {

domainNameLabel: 'mydc'

}

}

}

// Assign lab users the contributor role to the domain controller's public IP address

resource PipRoleAssignment 'Microsoft.Authorization/roleAssignments@2022-04-01' = [

for i in range(0, length(userprincipalids)): {

scope: dcpip

name: guid(userprincipalids['pc${(i + 1)}'], 'b24988ac-6180-42a0-ab88-20f7382dd24c', dcpip.id)

properties: {

roleDefinitionId: resourceId('Microsoft.Authorization/roleDefinitions', 'b24988ac-6180-42a0-ab88-20f7382dd24c')

principalId: userprincipalids['pc${(i + 1)}']

principalType: 'User'

}

}

]
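The role assignment loop above indexes userprincipalids by the keys pc1, pc2, and so on, so it relies on the object using exactly that naming. As a hedged alternative, the sketch below iterates over the object with Bicep’s items() function, which avoids rebuilding the key names. It is written as a standalone file: the pipName parameter and the contributorRoleId variable are hypothetical names introduced here, and the public IP is referenced with the existing keyword rather than created:

```bicep
// Sketch only: assign the Contributor role on an existing public IP
// to every user in the userprincipalids object, whatever its keys are called.

// Hypothetical parameter: name of the public IP created by dc.bicep
param pipName string

// Assumed shape, as in the article: { pc1: '<object id>', pc2: '<object id>', ... }
param userprincipalids object

// Built-in Contributor role definition ID (the same GUID used in the article)
var contributorRoleId = 'b24988ac-6180-42a0-ab88-20f7382dd24c'

// Reference the already-deployed public IP instead of creating a new one
resource dcpip 'Microsoft.Network/publicIPAddresses@2023-04-01' existing = {
  name: pipName
}

resource pipRoleAssignment 'Microsoft.Authorization/roleAssignments@2022-04-01' = [
  for user in items(userprincipalids): {
    scope: dcpip
    // user.key is the property name (e.g. 'pc1'), user.value is the principal ID
    name: guid(user.value, contributorRoleId, dcpip.id)
    properties: {
      roleDefinitionId: subscriptionResourceId('Microsoft.Authorization/roleDefinitions', contributorRoleId)
      principalId: user.value
      principalType: 'User'
    }
  }
]
```

Keeping the role GUID in a single variable also means the three role assignment loops in dc.bicep could share it instead of repeating the literal.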

Then, as done within the vm.bicep code, we’ll create the DC’s Network Interface Card (NIC) and associate it with the applicable public IP address and subnet. After that, we’ll assign lab users the contributor role to the DC’s NIC:

// Create the domain controller's NIC and associate it with the applicable public IP address and subnet

resource dcnic 'Microsoft.Network/networkInterfaces@2023-04-01' = {

name: '${vmName}-nic'

location: location

properties: {

ipConfigurations: [

{

name: 'ipconfig1'

properties: {

subnet: {

id: subnetId

}

privateIPAllocationMethod: 'Dynamic'

publicIPAddress: {

id: dcpip.id

}

}

}

]

}

}

// Assign lab users the contributor role to the domain controller's NIC

resource NicRoleAssignment 'Microsoft.Authorization/roleAssignments@2022-04-01' = [

for i in range(0, length(userprincipalids)): {

scope: dcnic

name: guid(userprincipalids['pc${(i + 1)}'], 'b24988ac-6180-42a0-ab88-20f7382dd24c', dcnic.id)

properties: {

roleDefinitionId: resourceId('Microsoft.Authorization/roleDefinitions', 'b24988ac-6180-42a0-ab88-20f7382dd24c')

principalId: userprincipalids['pc${(i + 1)}']

principalType: 'User'

}

}

]

Finally, we’ll write the code to deploy the domain controller:

resource dc 'Microsoft.Compute/virtualMachines@2023-09-01' = {

name: vmName

location: location

properties: {

hardwareProfile: {

vmSize: vmSize

}

osProfile: {

computerName: vmName

adminUsername: DcAdminUsername

adminPassword: DcAdminPassword

}

storageProfile: {

imageReference: {

publisher: 'MicrosoftWindowsServer'

offer: 'WindowsServer'

sku: '2019-Datacenter'

version: 'latest'

}

osDisk: {

name: '${vmName}-osdisk'

caching: 'ReadOnly'

createOption: 'FromImage'

managedDisk: {

storageAccountType: 'StandardSSD_LRS'

}

}

dataDisks: [

{

name: '${vmName}-datadisk'

caching: 'ReadWrite'

createOption: 'Empty'

diskSizeGB: 20

managedDisk: {

storageAccountType: 'StandardSSD_LRS'

}

lun: 0

}

]

}

networkProfile: {

networkInterfaces: [

{

id: dcnic.id

}

]

}

}

}

While the above code looks similar to the code in vm.bicep, there are differences between the two. First, the image reference is hardcoded so that we always deploy Windows Server 2019 Datacenter. Secondly, our DC code defines a 20 GB data disk for the AD database files, with a custom name, caching set to ReadWrite and a logical unit number (LUN) of 0.

These values are hardcoded because the DC does not need the same level of flexibility and reusability as the workstation code in vm.bicep.

We’ll also add the virtual machine extensions as nested resources inside our dc resource. These will install Sysmon and the Azure Monitor Agent on the domain controller:

// Install Azure Monitor Agent on the VM

resource ama 'extensions@2023-09-01' = {

name: 'ama-deploy'

location: location

properties: {

publisher: 'Microsoft.Azure.Monitor'

type: 'AzureMonitorWindowsAgent'

typeHandlerVersion: '1.0'

autoUpgradeMinorVersion: true

enableAutomaticUpgrade: true

}

}

// Execute the VM post deployment configuration

resource vme1 'extensions@2023-09-01' = {

name: 'sysmon-deploy'

location: location

properties: {

publisher: 'Microsoft.Compute'

type: 'CustomScriptExtension'

typeHandlerVersion: '1.10'

autoUpgradeMinorVersion: true

protectedSettings: {

fileUris: [

configscriptlocation

]

commandToExecute: 'powershell -ExecutionPolicy Unrestricted -File win10-vm-sysmon-post-deployment-config.ps1'

}

}

}

Deploying Active Directory

To deploy Active Directory on the DC, we’ll be adding a third, specialised extension:

// Install and configure Active Directory using a DSC extension

resource vme2 'extensions@2022-08-01' = {

name: 'ad-deploy'

location: location

properties: {

publisher: 'Microsoft.Powershell'

type: 'DSC'

typeHandlerVersion: '2.19'

autoUpgradeMinorVersion: true

settings: {

ModulesUrl: 'https://github.com/blogonsecurity/vm-scripts/raw/refs/heads/main/CreateADPDC.zip'

ConfigurationFunction: 'CreateADPDC.ps1\\CreateADPDC'

Properties: {

DomainName: domainName

AdminCreds: {

UserName: DcAdminUsername

Password: 'PrivateSettingsRef:AdminPassword'

}

}

}

protectedSettings: {

Items: {

AdminPassword: DcAdminPassword

}

}

}

}

This resource uses the Azure Desired State Configuration (DSC) extension to deploy Active Directory. DSC is a configuration management tool ensuring that virtual machines (VMs) or other resources remain in a specific, predefined state. DSC is part of Windows PowerShell and enables the automatic setup, configuration, and management of both Windows and Linux environments. It is useful for maintaining consistency, reducing configuration drift, and automating infrastructure as code deployment.

Note the ModulesUrl parameter in the DSC’s settings. It references an AD deployment DSC package called CreateADPDC.zip. The zip file contains the DSC script, which is taken from Azure’s quickstart deployment template for Active Directory.

You can review the internals of the DSC script by following the above link. However, for this blog post, it is sufficient to know that the DSC script configures a local machine as a primary domain controller (PDC) for an Active Directory (AD) domain.

As part of its logic, it installs the DNS Server role, configures the Azure Guest Agent to depend on DNS, and sets the DNS server to use the machine’s loopback address. It also enables DNS diagnostics and installs Active Directory Domain Services (ADDS) and related tools.

Additionally, the script waits for Disk 2, formats it, and assigns it as the drive for the AD database files. Finally, it sets up the machine as the first domain controller in a new domain, configuring paths for the AD database, logs and AD System Volume. This ensures the complete setup of the AD environment with DNS and disk configuration.

As an additional step, we must connect the DC’s Azure Monitor Agent to the Sysmon data collection rule defined in main.bicep. We must also assign lab users the contributor role to the DC:

// Connect the Domain controller's Azure Monitor Agent to the Sysmon data collection rule

resource workstationAssociation 'Microsoft.Insights/dataCollectionRuleAssociations@2022-06-01' = {

name: '${vmName}-ra'

scope: dc

properties: {

dataCollectionRuleId: dcrid

}

}

// Assign lab users the contributor role to the domain controller

resource DcRoleAssignment 'Microsoft.Authorization/roleAssignments@2022-04-01' = [

for i in range(0, length(userprincipalids)): {

scope: dc

name: guid(userprincipalids['pc${(i + 1)}'], 'b24988ac-6180-42a0-ab88-20f7382dd24c', dc.id)

properties: {

roleDefinitionId: resourceId('Microsoft.Authorization/roleDefinitions', 'b24988ac-6180-42a0-ab88-20f7382dd24c')

principalId: userprincipalids['pc${(i + 1)}']

principalType: 'User'

}

}

]

To complete our DC deployment script, we must update the lab’s DNS to point to our newly deployed Active Directory server. This is done with an additional Bicep module called vnet-with-dns-server.bicep. We must add this module to our modules folder.

The module code defines a virtual network with a more flexible configuration. It uses parameters that allow the VNet’s name, address range, subnet, and DNS server settings to be customized at deployment. It also includes a dhcpOptions block to configure the lab’s DNS servers to point to our DC. The full code looks as follows:

param virtualNetworkName string

param virtualNetworkAddressRange string

param subnetName string

param subnetRange string

param DNSServerAddress array

param location string

resource virtualNetwork 'Microsoft.Network/virtualNetworks@2022-07-01' = {

name: virtualNetworkName

location: location

properties: {

addressSpace: {

addressPrefixes: [

virtualNetworkAddressRange

]

}

dhcpOptions: {

dnsServers: DNSServerAddress

}

subnets: [

{

name: subnetName

properties: {

addressPrefix: subnetRange

}

}

]

}

}

After creating the module to update the lab’s DNS server, we must invoke it within dc.bicep. This is done as follows:

module updateVNetDNS 'vnet-with-dns-server.bicep' = {

scope: resourceGroup()

name: 'dns-update'

params: {

virtualNetworkName: '${labname}-vnet'

virtualNetworkAddressRange: '10.0.0.0/16'

subnetName: '${labname}-subnet'

subnetRange: '10.0.0.0/24'

DNSServerAddress: [

'10.0.0.4'

]

location: location

}

dependsOn: [

dc

]

}
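The module call above hardcodes 10.0.0.4 as the DNS server, while the DC’s NIC uses dynamic private IP allocation. That works as long as the DC’s NIC is the first one created in the subnet, since Azure reserves the first four addresses (.0 to .3) of each subnet and dynamic allocation typically hands out the next free address. A hedged way to make this assumption explicit is to pin the address on the NIC. The sketch below is a standalone variant of the NIC resource; the dcPrivateIp parameter and the dnsServers output are hypothetical names, and the public IP association is left out for brevity:

```bicep
// Sketch only: a DC NIC with a pinned private address, written as a standalone file.
param location string = resourceGroup().location
param vmName string
param subnetId string

// Assumption: first usable address in 10.0.0.0/24, matching the value in the module call
param dcPrivateIp string = '10.0.0.4'

resource dcnic 'Microsoft.Network/networkInterfaces@2023-04-01' = {
  name: '${vmName}-nic'
  location: location
  properties: {
    ipConfigurations: [
      {
        name: 'ipconfig1'
        properties: {
          subnet: {
            id: subnetId
          }
          // Static allocation keeps the DNS server address stable across redeployments
          privateIPAllocationMethod: 'Static'
          privateIPAddress: dcPrivateIp
        }
      }
    ]
  }
}

// Hypothetical output: the same address, ready to pass as DNSServerAddress
output dnsServers array = [
  dcPrivateIp
]
```

With this approach the address passed to DNSServerAddress and the address on the NIC come from a single parameter, so they cannot drift apart.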

The above Bicep code will automatically deploy a DC and its associated resources. More importantly, it will ensure that the DC is monitored using Sysmon. Finally, it will automatically assign the required permissions for the users to access the lab’s DC.

Joining the VMs to the domain

To complete the deployment of our DC, we will need to upgrade the code in the vm.bicep file. Specifically, we will add an extension within the vm resource to ensure every VM joins the lab’s domain after the deployment. The extension code looks like this:

resource vme2 'extensions@2022-08-01' = {

name: 'ad-join'

location: location

properties: {

publisher: 'Microsoft.Powershell'

type: 'DSC'

typeHandlerVersion: '2.19'

autoUpgradeMinorVersion: true

settings: {

ModulesUrl: 'https://github.com/blogonsecurity/vm-scripts/raw/refs/heads/main/Join-Domain.zip'

ConfigurationFunction: 'Join-Domain.ps1\\Join-Domain'

Properties: {

domainFQDN: domainName

computerName: vmName

adminCredential: {

UserName: DcAdminUsername

Password: 'PrivateSettingsRef:AdminPassword'

}

}

}

protectedSettings: {

Items: {

AdminPassword: DcAdminPassword // key spelled as in the PrivateSettingsRef:AdminPassword reference above

}

}

}

}

This code deploys another DSC extension that automatically joins the VM to an Active Directory domain. The extension downloads a DSC module (Join-Domain.zip) from GitHub and executes the Join-Domain.ps1 script.

Additionally, the code uses the specified fully qualified domain name (FQDN), the virtual machine’s name and the provided administrative credentials (username and password) to join the VM to the domain. Note that the password is passed through protectedSettings, which Azure encrypts, rather than through the plain settings block.

Finally, we must update the parameters at the top of the vm.bicep file, like so:

// Append the below parameters

// after all the others

param domainName string

param DcAdminUsername string

@secure()

param DcAdminPassword string

Putting everything together

To complete our domain controller deployment code, we must update the main.bicep file. First, we must include the parameters and resources related to the domain deployment logic, allowing workstations to join the lab’s domain. Specifically, we’ll need to introduce three new parameters at the top of main.bicep called dcadminusername, dcadminpassword, and domainName:

param location string

param labname string

param userprincipalids object

param vmusername string

param dcadminusername string // new parameter

@secure()

param vmpassword string

@secure()

param dcadminpassword string // new parameter

param vmostag string

param vmsize string

param vmoffer string

param vmpublisher string

param vmsku string

param configscriptlocation string

param domainName string // new parameter

param logAnalyticsWorkspaceRetention int

param logAnalyticsWorkspaceDailyQuota int

Next, we must update the lab’s main configuration file (main.parameters.json) with the new parameters. Specifically, we’ll need to add the below JSON snippet to our configuration file:

{

// Append the below values to main.parameters.json

"dcadminusername": {

"value": "" // DC username value

},

"dcadminpassword": {

"value": "" // DC administrator password value

},

"domainName": {

"value": "" // DC domain e.g. soclab.local

}

}

Once the parameters have been updated across both files, we need to add the DC deployment code to main.bicep. This is done like so:

// Deploy domain controller

module dc 'modules/dc.bicep' = {

name: '${labname}-dc-${vmostag}'

params: {

domainName: domainName

location: location

subnetId: virtualNetwork.properties.subnets[0].id

vmName: '${labname}-dc-${vmostag}'

vmSize: vmsize

DcAdminUsername: dcadminusername

DcAdminPassword: dcadminpassword

dcrid: dcr.id

userprincipalids: userprincipalids

labname: labname

configscriptlocation: configscriptlocation

}

}

The final step is to update the VM deployment code to ensure the DcAdminUsername, DcAdminPassword and domainName are supplied:

// Deploy workstations for each lab user

module workstations 'modules/vm.bicep' = [

for i in range(0, length(userprincipalids)): {

dependsOn: [

dc

]

name: '${labname}-pc${(i + 1)}-${vmostag}'

params: {

// All other vm parameters are here

// Append the below parameters after

// all the others

DcAdminUsername: dcadminusername

DcAdminPassword: dcadminpassword

domainName: domainName

}

}

]

Conclusion

Our lab deployment code has now been updated to automatically deploy a domain controller and join all virtual machines to it. These updates hugely enhance our lab’s infrastructure and further increase the training possibilities within it. Your team will now be able to practice both offensive and defensive techniques in a lab that closely resembles an enterprise network.

With the DC deployment updates in place, we can run the same commands as before to deploy our lab. As always, we first create a resource group in our chosen deployment region:

az group create --name [INSERT YOUR RESOURCE GROUP] --location [INSERT YOUR DEPLOYMENT REGION]

Then, we run the below deployment command:

az deployment group create --resource-group [INSERT YOUR RESOURCE GROUP] --template-file main.bicep --parameters '@main.parameters.json'

Finally, to destroy the lab, we run this command:

az group delete --name [INSERT YOUR RESOURCE GROUP]

In the next part of this series, we will improve the ingestion and parsing of Sysmon data and examine techniques that simplify writing Sysmon detections.