Creating an LLM AI security checklist for rapid fieldwork use

Learn how security teams can help companies safely adopt LLM AIs by using a fieldwork checklist based on OWASP

In 2023, the technology industry saw a surge of open-source LLM releases. In the first half of 2024, many companies are getting their hands on these models and looking for ways to integrate them into their products and processes.

While the availability of open-source LLMs opens exciting possibilities for companies worldwide, for many security teams this poses a challenge: introducing these LLMs in a safe and compliant way within company products or processes.

Today, little official guidance exists on the safe introduction of LLMs within businesses. Furthermore, the literature that does exist is still being actively developed and, as a result, is scattered across several initiatives managed by different companies and institutions.

Given this context, security teams could benefit from a checklist that unifies the available LLM guidance into a single document to use when reviewing company LLM projects. In this article, we’ll make a first attempt at creating such a checklist.

Getting started

Any online search for LLM cybersecurity checklists will return results from several vendors promoting their checklists. While these checklists are often a great source of guidance, they tend to be specific to the technologies of the vendors promoting them.

However, among the various vendor-sponsored results, you’ll find that in December 2023 OWASP published the first version of its LLM AI cybersecurity and governance checklist. To date, this represents one of the few open-source attempts to codify best practices regarding LLM AI security and governance.

The document covers thirteen areas that security teams should evaluate when it comes to LLM security and governance. OWASP derived its checklist from materials and best practices gathered through its AI Exchange initiative. This initiative seeks to advance the development of AI security standards and regulations by providing an independent repository of best practices for AI security, privacy and governance.

While OWASP’s AI Exchange is still an early-stage initiative, it has already yielded useful guidance for security teams. The ML Top 10 and LLM Top 10 vulnerability guides, in particular, should be bookmarked by any security professional currently working or advising on AI security.

Reviewing the OWASP checklist

At 32 pages, the OWASP checklist is a lengthy document. It starts with an overview of the different types of AI available on the market today and introduces the challenges of responsibly building LLMs that are secure, trustworthy and compliant. In addition, the introduction outlines current LLM threat categories as well as the fundamental security principles that should underpin any LLM implementation effort. Finally, the introduction concludes with a very useful overview of LLM deployment strategies.

The thirteen areas covered by the OWASP checklist include:

- Adversarial risk: considers threats from the perspective of both competitors and attackers. Here, security teams should help the organisation review the effectiveness of current security controls, especially those that might be vulnerable to advanced AI-powered attacks. Finally, OWASP recommends updating incident response plans to specifically address these new threats, including AI-enhanced attacks.

- Threat modelling: this section emphasizes the importance of threat modelling to proactively address security risks associated with Generative AI (GenAI) and Large Language Models (LLMs). Here, the checklist outlines areas to consider, such as how attackers might leverage GenAI for personalized attacks, spoofing, and malicious content generation. It also stresses the need for robust defenses to detect and neutralize harmful inputs, secure integrations with existing systems, prevent insider threats and unauthorized access, and filter out inappropriate content generated by LLMs.

- AI asset inventorying: here OWASP outlines the key steps for building a comprehensive AI asset inventory. It suggests creating a catalog of AI services, tools, and their owners, including them in the software bill of materials (SBOM), and identifying the data sources used by the AI along with their sensitivity. Additionally, it recommends evaluating the need for penetration testing and establishing a standardized onboarding process for new AI solutions. Finally, it highlights the importance of having skilled IT staff available to manage these assets effectively.

- AI security and privacy: this checklist focuses on building employee trust and awareness regarding LLMs within the company. It recommends training employees on ethics, responsible use, and legal considerations surrounding LLMs. Additionally, it suggests checking if security awareness training should be updated to address new threats like voice and image cloning, and the increased risk of spear phishing attacks facilitated by LLMs. Finally, the checklist recommends specific security training for DevOps and engineering teams involved in deploying AI solutions.

- Business case definition: in this area, OWASP recommends that businesses define solid business cases for their planned adoption of AI or LLMs. It offers specific use cases for consideration, such as enhancing customer experience, improving knowledge management and streamlining business operations.

- Governance aspects: in this area, OWASP recommends defining checks and controls to establish strong corporate governance around LLMs. It emphasizes the importance of defining clear roles and responsibilities by creating an “AI RACI chart” that identifies who is responsible, accountable, consulted, and informed within the organization. Additionally, it highlights the need to develop dedicated risk assessments, data management policies and an overarching AI policy. Finally, the checklist emphasizes data security by requiring data classification and access limitations for LLM training and usage, as well as an acceptable use policy for employees interacting with generative AI tools.

- Legal aspects: this checklist highlights the various legal considerations surrounding LLMs. Key areas suggested for review include product warranties, user agreements (EULAs) for both the organization and its customers, data privacy, intellectual property ownership of AI-generated content, and potential liabilities for issues like plagiarism, bias, or copyright infringement. The checklist also recommends reviewing contracts with indemnification clauses, insurance coverage for AI use, and potential copyright issues. Finally, it suggests restricting employee or contractor use of generative AI tools in situations where rights or intellectual property ownership could be at risk, and ensuring compliance with data privacy regulations when using AI for employee management or hiring.

- Regulatory aspects: this checklist emphasizes the need to identify relevant regulations by location and assess how AI tools used for employee management or hiring comply with these regulations. It highlights the importance of reviewing vendor practices for training data, bias mitigation, data security, and responsible use of facial recognition.

- Implementation and usage aspects: in this area, OWASP provides a checklist to review data security measures, access control, training pipeline security, and input/output validation. It highlights the importance of monitoring, logging, auditing, and penetration testing to identify and address vulnerabilities. The checklist also focuses on supply chain security by recommending audits and reviews of third-party vendors.

- Testing, evaluation, verification and validation: in this area, OWASP provides a checklist for implementing adequate testing, evaluation, verification, and validation (TEVV) processes.

- Transparency, accountability and ethical aspects: here OWASP covers the important role that model cards and risk cards play in ensuring the ethical and responsible use of LLMs. Model cards provide transparency by documenting the model’s design, capabilities, and limitations. This allows users to understand and trust the AI system, leading to safe and informed applications. Risk cards complement this by proactively addressing potential issues like bias, privacy, and security vulnerabilities. Here OWASP provides a checklist that helps ensure that these cards are made available to developers, users, regulators, and ethicists, fostering collaboration and careful consideration of AI’s social impact. Finally, the checklist recommends reviewing model cards and risk cards whenever available, and establishing a system for tracking and maintaining these cards for all deployed models, even those obtained through third parties.

- LLM optimisation: here OWASP’s checklist discusses two methods for improving the performance of LLMs: fine-tuning and Retrieval-Augmented Generation (RAG). It provides two checklist items for security teams to consider: RAG examples, and RAG pain points with their proposed solutions.

- AI red teaming: in this last area, the checklist recommends incorporating red team testing as a standard practice for AI models and applications. OWASP cautions, however, that red teaming should be used alongside other methods, like algorithmic impact assessments and external audits, for a more comprehensive evaluation.
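To make the AI asset inventorying area above more concrete, here is a minimal Python sketch of what a single inventory record and a gap check might look like. The `AIAsset` class, its field names and the `inventory_gaps` helper are hypothetical and are not taken from the OWASP document; they simply mirror the items summarised above (owner, SBOM inclusion, data sources with their sensitivity, and the penetration testing decision).

```python
from dataclasses import dataclass, field


@dataclass
class AIAsset:
    """One row of a hypothetical AI asset inventory (illustrative only)."""
    name: str      # AI service or tool
    owner: str     # accountable person or team ("" if unknown)
    in_sbom: bool  # is the asset listed in the software bill of materials?
    # Data source name -> sensitivity label ("" if not yet classified)
    data_sources: dict[str, str] = field(default_factory=dict)
    pen_test_assessed: bool = False  # was the need for pen testing evaluated?


def inventory_gaps(asset: AIAsset) -> list[str]:
    """Return the inventory items that are still open for this asset."""
    gaps = []
    if not asset.owner:
        gaps.append("no owner assigned")
    if not asset.in_sbom:
        gaps.append("not included in SBOM")
    if not asset.data_sources:
        gaps.append("data sources not identified")
    for source, sensitivity in asset.data_sources.items():
        if not sensitivity:
            gaps.append(f"sensitivity not set for data source '{source}'")
    if not asset.pen_test_assessed:
        gaps.append("need for penetration testing not evaluated")
    return gaps


# Example entry with made-up values
chatbot = AIAsset(
    name="support-chatbot",
    owner="",
    in_sbom=True,
    data_sources={"helpdesk tickets": "confidential", "public docs": ""},
)
for gap in inventory_gaps(chatbot):
    print(f"- {gap}")
```

A record like this is only a starting point. In practice the inventory would more likely live in an existing asset management or SBOM tool than in standalone code.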

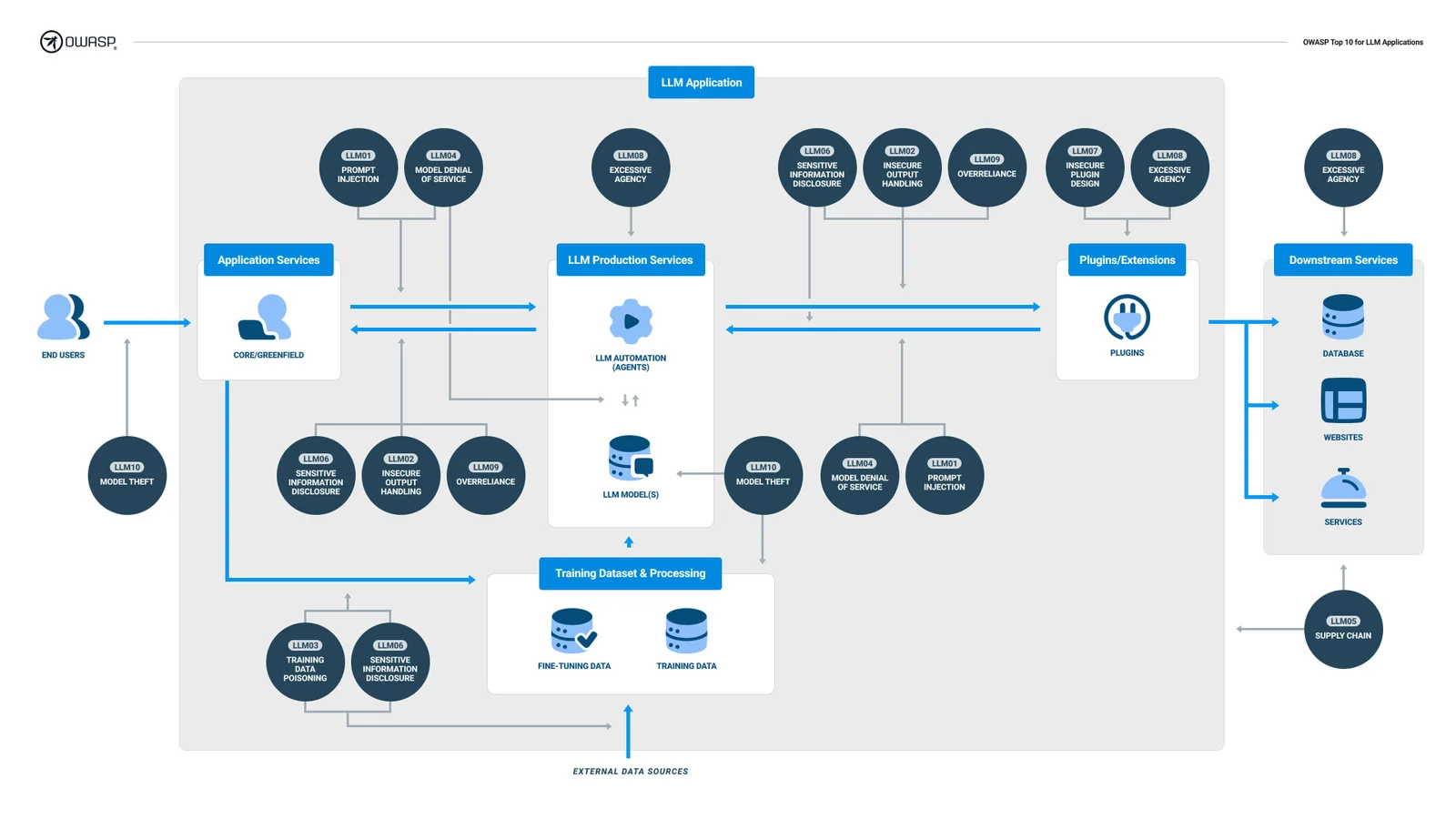

In the last ten pages, OWASP provides a comprehensive list of additional resources to supplement the checklist. Worthy of note is the inclusion of OWASP’s TOP 10 LLM vulnerabilities in poster format. This poster is a great asset for security teams to use when needing to provide rapid guidance to development teams. Especially in the early threat modelling and feature design phases, as seen below:

Finally, OWASP also provides links to further AI security materials developed by MITRE as well as links to AI vulnerability databases and procurement guidance from the World Economic Forum.

Improvement opportunities

There is no denying that the OWASP checklist is a great source of information in an area affected by a general lack of useful, neutral guidance. Once again, OWASP fulfills a critical need with an excellent product. With this checklist, it has paved the way for establishing best practices in a bleeding-edge security area. Having said that, at least three areas for improvement stand out.

First, the checklist is a first version that will likely go through multiple updates. Besides the occasional typo, the overall document structure has room for improvement. For example, the thirteen areas of the checklist are grouped by logical topic (e.g. adversarial risk, threat modelling, legal). This is not necessarily wrong. However, to increase immediate usability, it might be better to group checklists by capability area (e.g. protect, detect, respond). This would help security professionals intuitively understand how individual checklists help strengthen specific security capabilities within the company.

Secondly, the document’s length makes it less than optimal for rapid fieldwork use. This is exacerbated by the first issue described above. When using the document, it’s not uncommon to find oneself scrolling up and down to find the needed checklist buried deep within one of the thirteen logical topics. A checklist should be rapidly usable during meetings, conference calls and brainstorming sessions. A more compact and intuitive format is required for fieldwork use.

Finally, some areas of the checklist do not appear to be related to security (e.g. the business case checklist). This is in keeping with OWASP’s aim to deliver a checklist usable across various stakeholder groups, including executive management. While this is not necessarily wrong, including topics that are not security-specific increases the scope and volume of content. As a result, some checklists may not be useful to security teams tasked with delivering quick and effective LLM AI risk management advice.

Building a fieldwork checklist

To build an LLM AI checklist for rapid fieldwork use, it makes sense to start from the OWASP checklist. To address the above improvement points and increase usability in the field, we could map the individual OWASP checklists to the NIST Cybersecurity Framework (CSF) functions by using OWASP TASM.

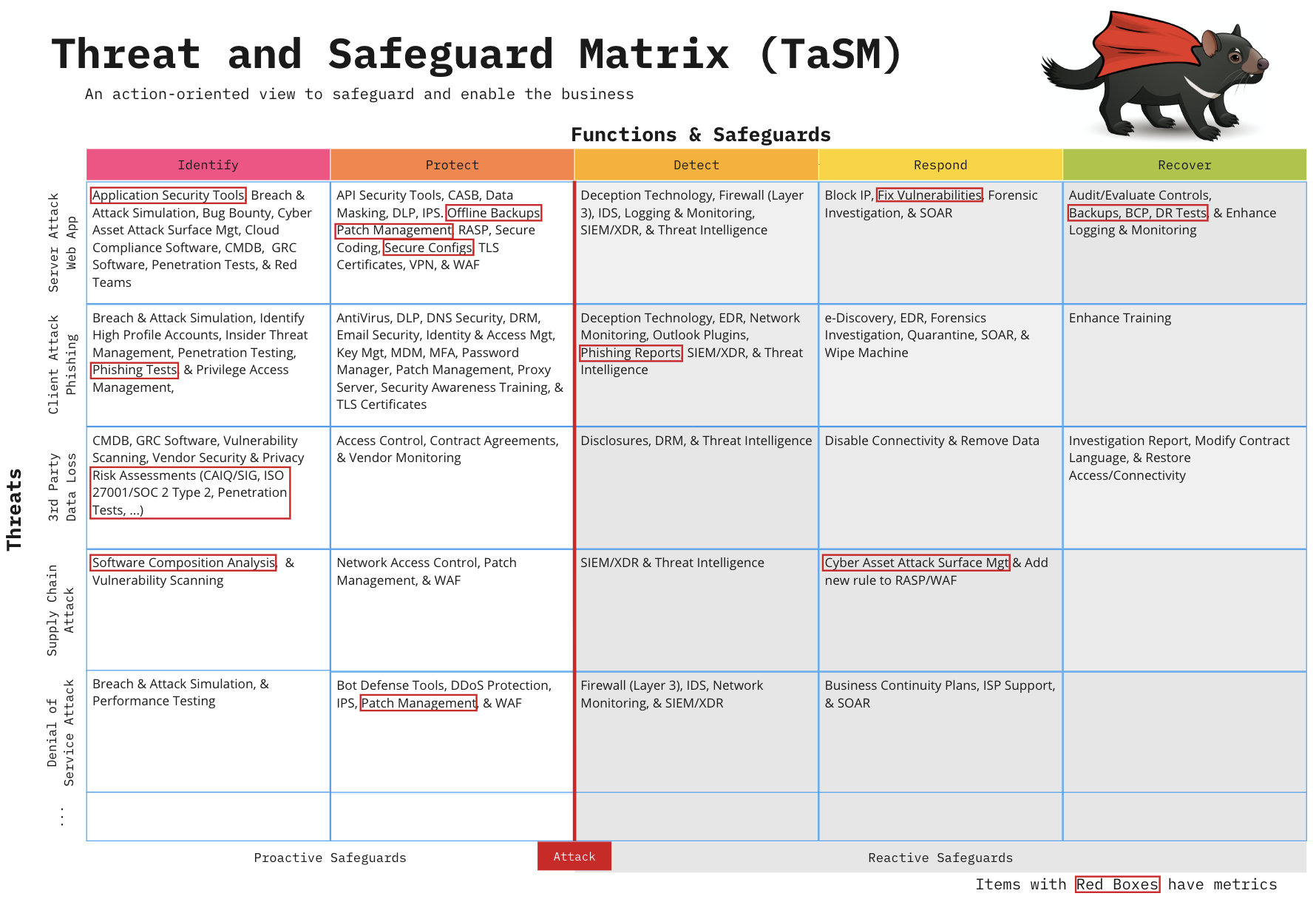

TASM, which stands for Threat and Safeguard Matrix, was created by OWASP to provide security teams (and CISOs) with an action-oriented view to safeguard and enable the business. While TASM was created primarily as a means to build a strategic, high-level action plan to counter several threats against a business, it can also be used to target a single security threat.

By looking at the image above, we can see how usability would increase by mapping the OWASP checklists to the various NIST functions and safeguards. Using TASM as a starting point we can arrange the thirteen OWASP checklists as follows:

- Govern: do we have a strategy to manage AI LLM threats?
  - Governance checklist
  - AI security and privacy checklist

- Identify: do we understand AI LLM threats?
  - AI asset inventory checklist
  - Legal risk checklist
  - Regulatory risk checklist

- Protect: can we mitigate AI LLM threats?
  - Threat modelling checklist
  - Implementation and usage checklist (some parts)
  - OWASP LLM Top 10 checklist

- Detect: can we detect AI LLM threats?
  - Implementation and usage checklist (some parts)
  - Testing, evaluation, verification and validation checklist

- Respond: can we contain AI LLM threats?
  - Implementation and usage checklist (some parts)

- Recover: can we recover from trouble?
  - Checklist based on Google IMAG and Bill Swearingen’s Awesome Incident Response Guide

After mapping and rearranging the individual checklists, we can condense everything into a one-page document using a format that helps readers with retrieving information rapidly.
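One way to prototype such a one-page format is to hold the mapping above in a small data structure and render it as plain text. The Python sketch below is only an illustration under stated assumptions: the names `FIELDWORK_CHECKLIST`, `render_one_pager` and `functions_for` are hypothetical, and the entries simply restate the mapping listed earlier rather than the individual OWASP checklist items.

```python
# Function -> guiding question and mapped checklists, as listed in this article.
# Dictionaries preserve insertion order, so the functions stay in sequence.
FIELDWORK_CHECKLIST = {
    "Govern": {
        "question": "Do we have a strategy to manage AI LLM threats?",
        "checklists": ["Governance", "AI security and privacy"],
    },
    "Identify": {
        "question": "Do we understand AI LLM threats?",
        "checklists": ["AI asset inventory", "Legal risk", "Regulatory risk"],
    },
    "Protect": {
        "question": "Can we mitigate AI LLM threats?",
        "checklists": [
            "Threat modelling",
            "Implementation and usage (some parts)",
            "OWASP LLM Top 10",
        ],
    },
    "Detect": {
        "question": "Can we detect AI LLM threats?",
        "checklists": [
            "Implementation and usage (some parts)",
            "Testing, evaluation, verification and validation",
        ],
    },
    "Respond": {
        "question": "Can we contain AI LLM threats?",
        "checklists": ["Implementation and usage (some parts)"],
    },
    "Recover": {
        "question": "Can we recover from trouble?",
        "checklists": [
            "Based on Google IMAG and Bill Swearingen's "
            "Awesome Incident Response Guide",
        ],
    },
}


def render_one_pager(mapping: dict) -> str:
    """Render the mapping as a compact text checklist with tick boxes."""
    lines = []
    for function, entry in mapping.items():
        lines.append(f"{function.upper()}: {entry['question']}")
        for name in entry["checklists"]:
            lines.append(f"  [ ] {name}")
    return "\n".join(lines)


def functions_for(checklist_name: str, mapping: dict) -> list[str]:
    """List the functions under which a given checklist appears."""
    return [
        function
        for function, entry in mapping.items()
        if any(checklist_name in name for name in entry["checklists"])
    ]


print(render_one_pager(FIELDWORK_CHECKLIST))
```

Keeping the mapping as data rather than as a formatted document means the same source could feed a printed one-pager, a wiki page or a review template. The lookup helper also shows at a glance that a checklist such as "Implementation and usage" is split across the Protect, Detect and Respond functions.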

Conclusion

The OWASP LLM AI security and governance checklist is a brilliant document that brings much-needed guidance to the field of AI security. As we have seen, its current format makes it difficult to consume and use rapidly. However, by taking inspiration from OWASP TASM, we can use an approach that extracts the essence of individual checklists and maps them to the NIST CSF capabilities. The result is a checklist that can be rapidly used by security professionals to provide reliable and effective advice across several stakeholder groups in their company.